Google’s "Full Stack Flex": Reflections from Cloud NEXT 2025

From cloud to chips to code agents, Google is leaning into vertical integration in its quest for AI dominance.

I was in Vegas last week at Cloud NEXT, where Google unveiled new releases and products across every layer of the AI stack:

Model layer: Google’s flagship Gemini took center stage with announcements around Gemini 2.5 Flash and Pro. Updates were also announced for generative media models in Vertex AI, including Lyria, Veo 2, Chirp 3, and Imagen 3.

AI infrastructure and chips: A focus on advancing Google's in-house custom silicon (Ironwood, the 7th-generation TPU), coupled with deepening partnerships with external vendors (NVIDIA Vera Rubin GPUs, plus A4 and A4X VMs powered by NVIDIA B200 and GB200 Blackwell GPUs).

Cloud: Announcements around Cloud WAN, Google Kubernetes Engine, and Gemini availability on Google Distributed Cloud.

Agents and applications: Buzz across Google Agentspace, the Agent2Agent Protocol (A2A), the Agent Development Kit, Agent Garden, Agent Engine, and new "agentic" capabilities in Gemini Code Assist, plus additional AI features in Google Workspace tools such as Docs and Sheets.

Cybersecurity: Google Unified Security, powered by AI.

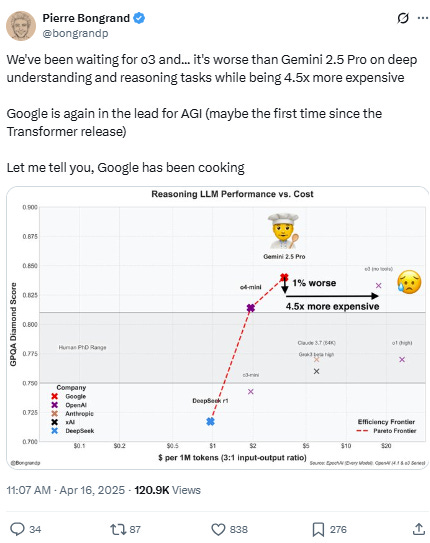

The pace and full-stack ambition of Google’s recent AI moves are hard to ignore. As I’ve previously noted, the company was slow off the blocks at the start of the AI boom, but the tech giant has undeniably awakened and re-emerged as a serious contender in the AI race. Or, as some have put it, “Google has been cooking.”

Vertical integration always carries risk, especially when companies overextend beyond their core competencies. But in the context of today’s still-nascent AI market, I view Google’s push to cover the entire AI stack as an astute, well-timed play. My assessment goes beyond the obvious benefits of vertical integration, such as efficiency, better unit economics, and business resilience. Rather, this push is shrewd because an interdependent architecture can unlock highly differentiated and functional user experiences during the formative stages of the AI paradigm shift.

Professor Clay Christensen’s Modularity Theory helps explain why vertical integration can be extremely powerful (and perhaps even necessary) given the AI industry's current level of maturity. Modularity Theory proposes that:

In markets where products are not yet good enough (i.e. there is a performance gap), competitive advantage goes to interdependent builds and vertically integrated companies since customers value functionality and reliability at this point. Pursuing modular architecture would limit the degrees of design freedom when pushing on the frontier of what is technologically possible, and also add friction to performance optimizations.

In markets where products are more than good enough (i.e. there is a performance surplus), competitive advantage goes to modularization through specialization, outsourcing, and dis-integration, since this is where factors beyond core functionality, such as speed, responsiveness, and convenience, tend to win.

Currently, I posit that we’re in the early stages of AI market formation, closer to the left-hand side of the framework (diagram above), where products are not yet good enough. The preconditions for modular architecture to succeed, such as specifiability, verifiability, and predictability, have not yet been fully defined in the AI industry. This implies that vertically integrated structures should yield significant advantages in product output and customer experience as the market matures.

There are of course only a handful of companies like Google that are currently in such a prime position (with lots of cash on hand) to become fully vertically integrated in the AI age. Apple, NVIDIA, and Tesla are a few tech giants that come to mind. But from xAI building its own data centers to OpenAI making big moves within the application and tooling layer, we’re starting to see startups move in this direction as well.

Industries constantly swing between interdependence and modularity as the more advantageous strategy — nothing stays static as competitive dynamics evolve. But in the early days of this AI revolution, I expect more players will lean into vertical integration as a way to build durable advantage in an increasingly fast-moving market.
