AI’s Linux Moment: Open LLM 'Davids' vs. Closed-Source 'Goliaths'

While foundational closed-model companies have captured the bulk of VC investment in the GenAI category, more open-source voices are entering the ecosystem, which could roil the current paradigm.

A few weeks ago, I wrote about how the AI model layer is shaping up to be one of the most strategic and competitive layers within the modern software stack. Currently, the three most highly valued GenAI startups are closed-model companies backed by deep-pocketed financial investors and powerful strategic partners:

Cohere officially announced its $270MM Series C led by Inovia Capital yesterday, reportedly at ~$2.1-$2.2Bn valuation. The company now counts NVIDIA and Oracle amongst its backers.

Anthropic announced a $450MM Series C led by Spark Capital just several weeks ago, reportedly at a valuation close to $5Bn. Google has invested $300MM in the company.

OpenAI announced a $10Bn financing from Microsoft earlier in the year. The company was reportedly valued at ~$29Bn in an April 2023 tender offer in which several VC firms participated.

These fundraisings stand out against the bleak backdrop of a VC activity slowdown, and the round sizes are striking given that $100MM+ rounds have become increasingly rare since the market downturn.

The unbridled confidence underpinning these closed-model investments is not universally shared across the VC industry. Earlier this week, Miles Grimshaw of Benchmark was asked by TechCrunch why his firm hadn’t invested in foundational model companies when presented with the opportunity to do so, and whether the large amounts of money these companies had raised were a factor. Grimshaw replied:

“We have not found the conviction of imagining the enduring outsize market share that one of them may have. I think you see this in the open source now that’s coming out and quickly catching up. You can imagine the inputs to some of these [large language models] obviously declining in cost over time, whether that’s the amount of compute available on a chip or the cost of any chip. The knowledge obviously is diffusing, and more people are knowing how to do it and don’t need lots of money just to try and figure out how to do it. You’ve even seen the rate of depreciation in some of the folks like OpenAI’s models. Like, think about how quickly they have obsoleted all of the spend they’ve done on GPT-2 or GPT-3.”

Competitive dynamics at the model layer are in flux. Grimshaw’s response prompted me to take a deeper look into the current GenAI funding paradigm and how open-source momentum during “AI’s Linux Moment” might impact the current state.

Where are funding dollars flowing to in the GenAI gold rush?

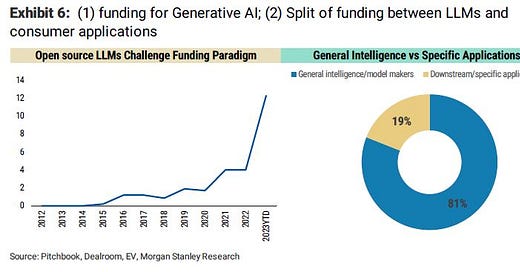

We’re currently in the midst of an AI hype cycle (chart above), so it should come as no surprise that VC investment is flooding into this space. In fact, Morgan Stanley found that by April 2023, over $12Bn of funding had already been channeled into the GenAI category globally year-to-date, a step-function increase from 2022:

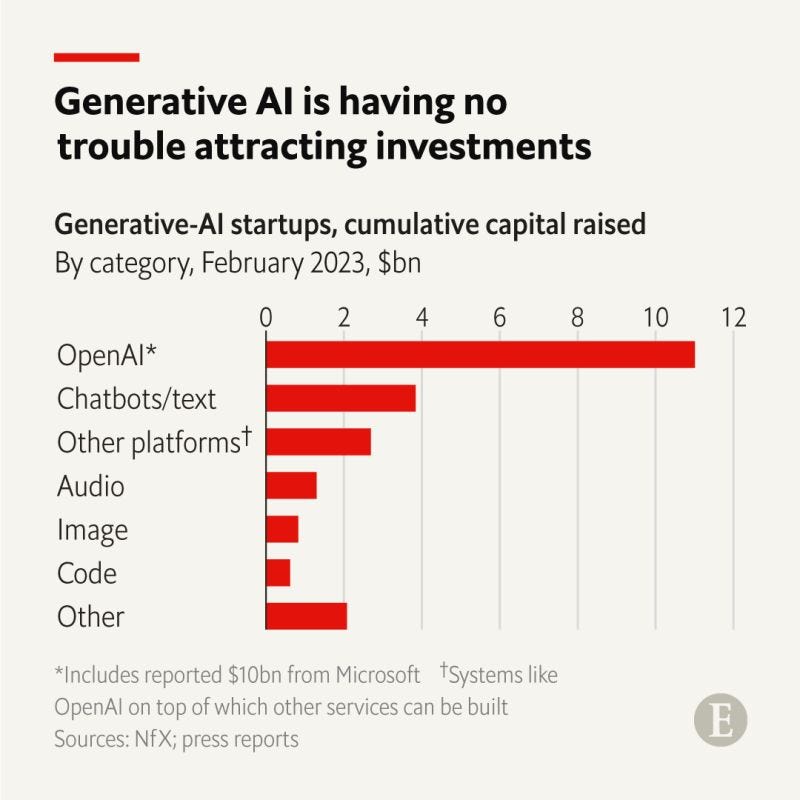

As Amara’s Law states, “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” Even with all the dollars already funneled into the category, AI as a technological paradigm is still early in its S-Curve and a lot remains unproven. So where has investment been flowing? Morgan Stanley found that ~80% of GenAI investment has gone to model layer companies (chart above). I ran a similar analysis based on raw data from NFX’s GenAI market map spreadsheet and found that, after filtering out companies founded before 2013, ~70% of investment went to model platform or MLOps companies.

Outsized attention on this part of the stack makes intuitive sense: we’re in the early innings of GenAI adoption, and the model layer is still evolving, with a few emerging leaders but no established winner yet. Much like cloud infrastructure providers, the model layer forms the foundation for the other layers of the stack, and we should expect more downstream applications to sprout as this layer matures. Additionally, training and developing LLMs is often more expensive than building applications, so model layer companies have higher capital requirements and tend to raise bigger rounds.

But it is important to note that even within the “hottest” slice of GenAI funding at the model layer, a Pareto pattern seems to be emerging: the bulk of the investment dollars within this layer is being captured by a select few closed-model companies. In particular, as highlighted earlier, OpenAI’s jaw-dropping $10Bn financing announcement has dwarfed both the typical VC round in 2023 (~70% of all venture rounds raised in 1Q23 were $10MM or less) and other model layer mega-rounds. As the chart above shows, OpenAI’s funding accounted for close to 50% of the entire pool of cumulative capital raised by GenAI startups as of February 2023.

A David vs Goliath battle between open and closed models

Given the large amount of investment flowing into the model layer, is the race to dominate this part of the stack confined only to these well-capitalized closed-model vendors? Not quite.

As I’ve noted previously, many other players from Big Tech companies to non-AI native startups to academic institutions are roiling the ecosystem, leading to a long list of open-source models entering the race. And when I say long, I really do mean LONG… just check out this database of open-source LLMs (h/t to Sung Kim for maintaining this). Many of these models did not exist a mere six months ago, but now that a chain reaction has started, as many as three open models are released on a daily basis!

Chris Ré from Stanford has likened this open-source momentum to AI’s Linux moment (referencing the movement toward an open-source operating system as an alternative to Microsoft’s closed-platform Windows):

“Since the beginning of the deep learning era, AI has had a strong open-source tradition. By Linux moment, I mean something else: we may be at the start of the age of open-source models and major open-source efforts that build substantial, long-lasting, and widely used artifacts. Many of the most important dataset efforts (e.g., LAION-5B) and model efforts (e.g., Stable Diffusion from Stability and Runway, GPT-J from EleutherAI) were done by smaller independent players in open-source ways. We saw this year how these efforts spurred a huge amount of further development and community excitement.”

Everyone in the ecosystem is paying attention and re-evaluating their areas of defensibility as open LLMs prove to be credible contenders against their better-funded closed competitors. In the viral leaked memo “We Have No Moat, And Neither Does OpenAI,” a Google researcher asserted that open-source players are closing the quality gap and will eventually outcompete proprietary closed models on dimensions such as speed and cost to train:

“While our models still hold a slight edge in terms of quality, the gap is closing astonishingly quickly. Open-source models are faster, more customizable, more private, and pound-for-pound more capable. They are doing things with $100 and 13B params that we struggle with at $10M and 540B. And they are doing so in weeks, not months. This has profound implications for us.”

The memo referenced Vicuna-13B as an example, an open-source chatbot trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT. Vicuna-13B was able to achieve more than 90% of the quality of OpenAI’s ChatGPT and Google’s Bard, while outperforming its predecessors LLaMA and Stanford Alpaca in very short order (chart below). Most notably, the cost of training Vicuna-13B was around $300, versus the millions of dollars that some have estimated are needed to train particular closed LLMs.

But the future is far from certain. Some have proposed that a “Linux moment” is not enough for open-source models to win against their closed-source counterparts, and that an “Apache moment” might be needed to tip the scales in their favor.

AI’s Linux Moment has added fuel to the fire

Will the most well-capitalized player triumph? Will there be a handful of winners? Will market structure stay fragmented in the long run? There is no dull day in GenAI since the landscape is evolving faster than ever before. The recent emergence of open-source players has injected more pressure into an already very competitive war at the model layer, but I believe this vibrancy is important since it can spur innovation and further democratize AI access for downstream companies to build upon.

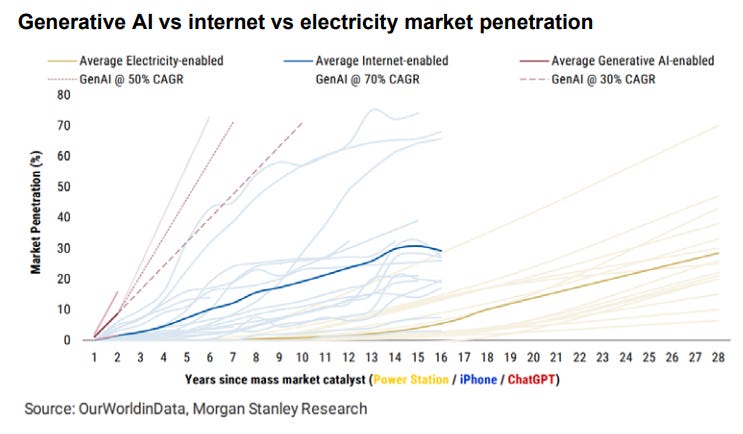

As Marc Andreessen recently wrote, “AI is quite possibly the most important – and best – thing our civilization has ever created, certainly on par with electricity and microchips, and probably beyond those.” So on that note, I’ll end by leaving you with the chart above to offer a contextual anchor for the profound moment we are currently experiencing, and the unprecedented pace of adoption that might ensue in the years to come given all the fuel that is being poured onto the fire!